I recently stumbled on this article from IEEE Spectrum, "The Top Programming Languages 2025: Does AI mean the end for the Top Programming Languages?" The article makes a few points I agree with, but it ultimately suggests we might be headed towards a reality where higher-level programming languages aren't even necessary anymore. At this point in the AI hype cycle, I don't see that becoming reality, and I'll explain why in this post by going point by point through Spectrum's article.

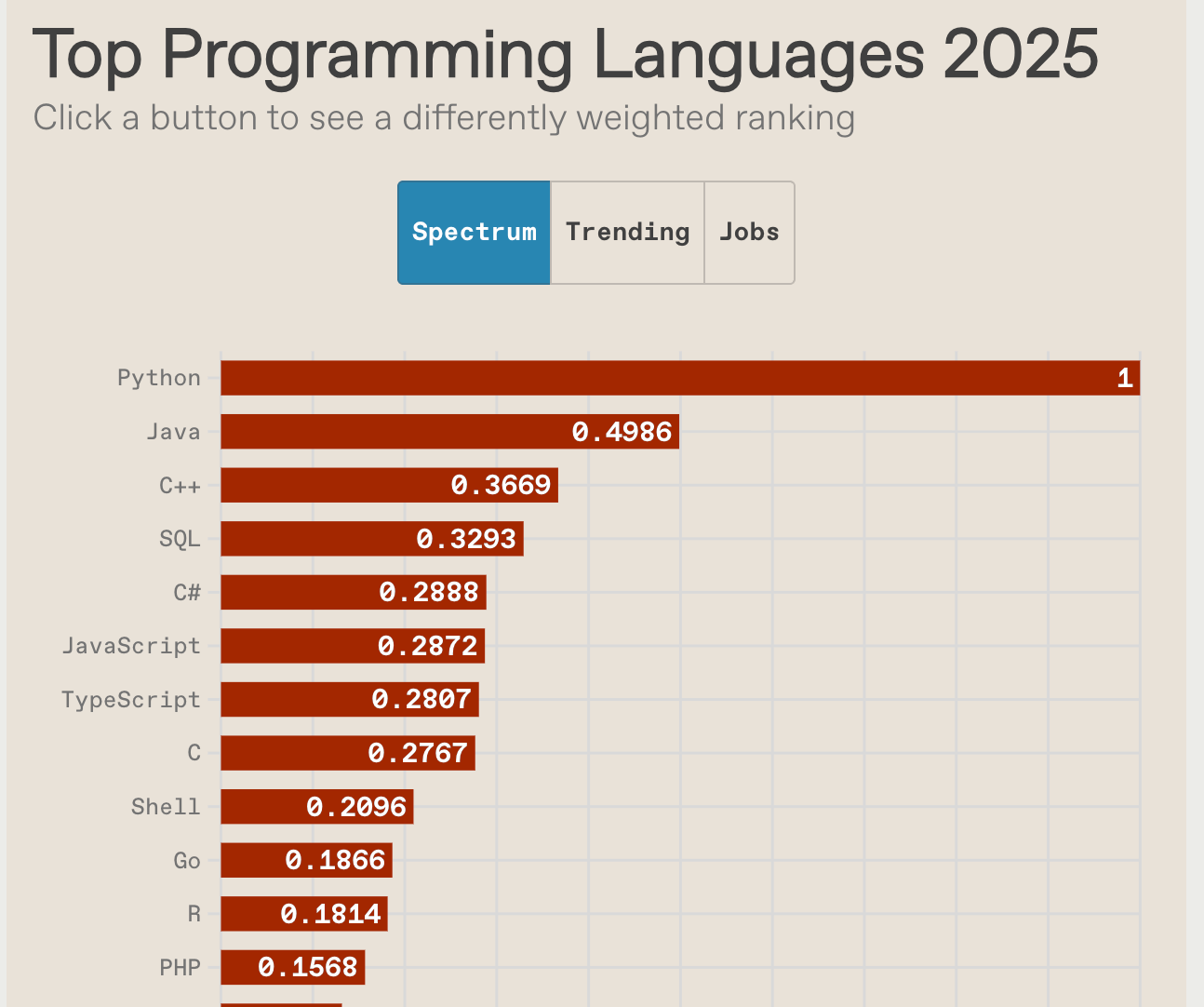

We start off with the results of Spectrum's survey. Check the article for their full methodology, but they essentially break down the results into the Spectrum, Trending, and Jobs rankings. The "Spectrum" ranking is weighted towards IEEE members, Trending takes things like Google search results and GitHub repositories into account, and Jobs is somehow measuring demand for different languages from IEEE's job board and others. All three have Python in the top spot.

> In the “Spectrum” default ranking, which is weighted with the interests of IEEE members in mind, we see that once again Python has the top spot, with the biggest change in the top five being JavaScript’s drop from third place last year to sixth place this year. As JavaScript is often used to create web pages, and vibe coding is often used to create websites, this drop in the apparent popularity may be due to the effects of AI that we’ll dig into in a moment.

I have to take a moment here to air a grievance I have with this and a couple of other "top programming languages" surveys I've seen floating around. Why in the world are JavaScript and TypeScript treated as separate languages in these surveys? I understand that they are technically different languages, but in my opinion, they should be counted together. In 2025, TypeScript feels like a lot more than just another language that transpiles to JavaScript, à la CoffeeScript back in the day. TypeScript might not always be this entrenched in the vast JS ecosystem, but adding a bit of this context to surveys like this would make the results more meaningful. In my opinion, JavaScript's drop from third place last year to sixth place this year has less to do with vibe coding and more to do with TypeScript becoming the default way to write JavaScript. AI has probably even had a hand in driving this trend. When you look at the Jobs ranking, JavaScript remains solidly ahead of TypeScript. Since job postings lag behind trends in the general development zeitgeist, I think this supports my conclusion.

Okay, let's move on. Quibbling about the actual results of this survey wasn't my point in writing this; I just had to get that annoyance off my chest. Let's get into the meat of the article:

> After all, the whole reason different computer languages exist is because given a particular challenge, it’s easier to express a solution in one language versus another. You wouldn’t control a washing machine using the R programming language, or conversely do a statistical analysis on large datasets using C.
>
> But it is technically possible to do both. A human might tear their hair out doing it, but LLMs have about as much hair as they do sentience. As long as there’s enough training data, they’ll generate code for a given prompt in any language you want. In practical terms, this means using one—any one—of today’s most popular general purpose programming languages. In the same way most developers today don’t pay much attention to the instruction sets and other hardware idiosyncrasies of the CPUs that their code runs on, which language a program is vibe coded in ultimately becomes a minor detail.

There's an analogy here that I've seen made almost anywhere agent coding is discussed. It's stated slightly differently here, but the idea is that the move from handwriting code to prompting an AI that writes code is similar to the move from assembly code to high level languages.

On one hand, I see where this analogy is coming from. It's easy to look at writing English to produce (mostly) working artifacts as the last step in the progression we started when abstracting assembly code into general-purpose languages. For me, that's about where the similarities end, and I think it's this incorrect analogy that is misleading many down the road of believing AI will make programming languages irrelevant. Using AI to generate code that becomes part of your codebase and needs to be maintained is very different from relying on a compiler or interpreter as a hard dependency.

Consider everyone's favorite language... Java. When Java abstracted away manual memory management and gave us the JVM, developers stopped worrying about pointer arithmetic, memory leaks, and platform-specific hardware issues because those concerns were solved by the abstraction. However, Java code generated with an LLM can't be treated like compiled bytecode. You as the developer still need to verify it fulfills your requirements, doesn't introduce security vulnerabilities, handles edge cases, and fits in with existing architectures. On one hand you have a guaranteed (or nearly guaranteed) abstraction, and on the other, you have a living artifact that still requires your expertise to validate and maintain. And that's the best-case scenario. More often than not, what you actually have is a rough first draft.

So if AI-as-the-next-generation-compiler isn't the correct analogy, what is? Don't overthink it! In my opinion, the simple analogy is the correct one: we as developers are leveling up into architects and product designers, with AI agents doing most of the hands-on coding.

> So how much abstraction and anti-foot-shooting structure will a sufficiently-advanced coding AI really need? A hint comes from recent research in AI-assisted hardware design, such as Dall-EM, a generative AI developed at Princeton University used to create RF and electromagnetic filters. Designing these filters has always been something of a black art, involving the wrangling of complex electromagnetic fields as they swirl around little strips of metal. But Dall-EM can take in the desired inputs and outputs and spit out something that looks like a QR code. The results are something no human would ever design—but it works.
>
> Similarly, could we get our AIs to go straight from prompt to an intermediate language that could be fed into the interpreter or compiler of our choice? Do we need high-level languages at all in that future? True, this would turn programs into inscrutable black boxes, but they could still be divided into modular testable units for sanity and quality checks. And instead of trying to read or maintain source code, programmers would just tweak their prompts and generate software afresh.

Now, I attempted to read the abstract of the Dall-EM paper from Princeton, and could barely understand any of it. But from what I can gather, this process of designing electromagnetic filters is verifiable. In other words, it's possible to create a design, run some simulations, and come up with a thumbs up or thumbs down on whether it works. I'm guessing that at a high level (gross oversimplification incoming), this is how the Dall-EM AI is able to weed out unworkable solutions until it arrives at the best one.
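
To make that guess concrete, here's a toy sketch of a generate-and-verify loop. This is not how Dall-EM actually works; `propose_design`, `simulate`, and the 8-cell "design" are all hypothetical stand-ins I made up for illustration. The point is only that when a simulator can score every candidate, the loop can throw away bad ones without a human ever reading them.

```python
import random

# Hypothetical target the toy "simulator" scores designs against.
TARGET = [1, 0, 1, 1, 0, 0, 1, 0]

def propose_design(rng):
    # Hypothetical generator: a design is just a list of on/off cells.
    return [rng.randint(0, 1) for _ in range(len(TARGET))]

def simulate(design):
    # Hypothetical simulator: fraction of cells that match the target.
    matches = sum(d == t for d, t in zip(design, TARGET))
    return matches / len(TARGET)

def search(trials, seed=0):
    # Generate candidates, score each one, and keep the best seen so far.
    rng = random.Random(seed)
    best, best_score = None, -1.0
    for _ in range(trials):
        candidate = propose_design(rng)
        score = simulate(candidate)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score

if __name__ == "__main__":
    design, score = search(trials=200)
    print(design, score)
```

Everything here hinges on `simulate` returning a single trustworthy number for each candidate.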

I think that applying our current LLM-based coding agents in this way is a leap too far. An agent is able to create a web page with a form that submits some data to a server, and can even connect to the browser via MCP and verify that the form submits without error. However, putting this in the larger context of a shopping cart in an ecommerce platform introduces all kinds of other variables that must also be considered. Is the UI user friendly? What happens when an item goes out of stock between the time the user adds it to their cart and the time they click check out? Can we make the time from submission to order confirmation faster? On and on and on. Trying to keep up with all of these competing requirements using prompts in plain English alone, and then getting slightly different results on each successive attempt at generating the code, sounds like a nightmare to me. Perhaps I'm missing some obvious revelation or am blind to the next coding agent tooling innovation, but I still believe the ability of humans to read and understand the code is vital.
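
As a small illustration of the out-of-stock case, here's a minimal sketch of a checkout that re-checks inventory at submission time. The names (`Inventory`, `checkout`, `OutOfStockError`) are hypothetical and not taken from any real platform. A "form submits without error" check would pass on the happy path and never exercise the failure branch, and someone still has to decide what the user should see when it triggers.

```python
from dataclasses import dataclass

class OutOfStockError(Exception):
    """Raised when a cart item can no longer be fulfilled at checkout."""

@dataclass
class Inventory:
    stock: dict  # maps SKU -> units currently available

    def available(self, sku):
        return self.stock.get(sku, 0)

def checkout(cart, inventory):
    # Re-check stock now, not when the item was added to the cart.
    # Validate every line first so a failure leaves inventory untouched.
    for sku, qty in cart.items():
        if inventory.available(sku) < qty:
            raise OutOfStockError(
                f"{sku}: wanted {qty}, only {inventory.available(sku)} left"
            )
    # All lines are fulfillable, so it is safe to decrement stock.
    for sku, qty in cart.items():
        inventory.stock[sku] -= qty
    return "confirmed"
```

Even this tiny version bakes in a product decision (reject the whole order rather than ship a partial one) that is easy to read in code and hard to pin down in a prompt.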

Instead, let's make it easier for us humans to interact with our team of coding agents, and verify their output. The Claude Code documentation lays out a workflow for using Git worktrees to support multiple agents running simultaneously. We should work to standardize and secure MCP tooling so that we can give agents tighter integration into our environments. Laravel Boost is a great example of this. When I use Claude Code in a Laravel app with Boost installed, the AI is able to inspect my database schema, test implementations with Tinker, and run tests. I believe that leveraging our existing expertise and software engineering practices and allowing AI to supercharge our productivity is the path forward.

Of course I could also be wrong. That's the joy of living in this AI age. No one knows what's coming next. The best thing to do is learn and adapt alongside these tools while building things you're passionate about. That's my plan, anyway.

Why Programming Languages Aren't Going Anywhere

Some are suggesting we're headed towards a reality where higher level languages aren't necessary anymore